Stable_video_style_transfer

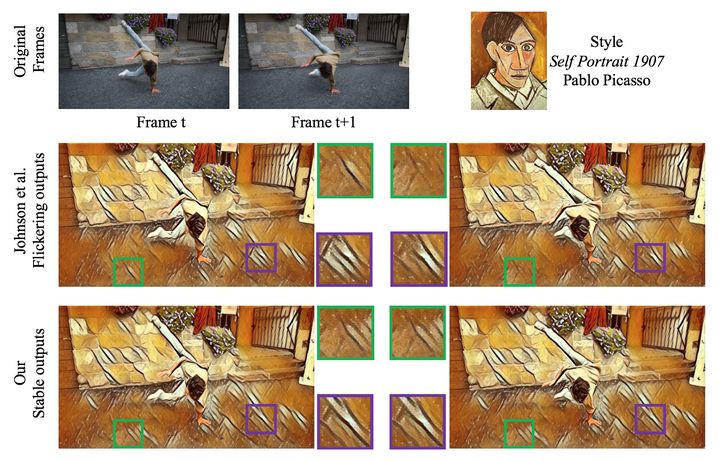

This project aims to address the flickering caused by naively applying per-frame stylisation methods (e.g., Fast-Neural-Style and AdaIN) to videos.

1. Background

In 2016, Gatys et al. were the first to propose an image style transfer algorithm based on deep neural networks, capable of transferring artistic style (e.g., colours, textures and brush strokes) from a given artwork to arbitrary photos. The visually appealing results and elegant design of their approach motivated many researchers to explore this field, which later became known as Neural Artistic Style Transfer. As similar methods sped up to nearly real time, researchers gradually turned their focus to video applications. However, naively applying these per-frame stylisation methods causes severe flickering, which shows up as inconsistent textures across adjacent video frames.

To address the flickering problem, several approaches have attempted to produce coherent video style transfer results. Early on, Anderson et al. and Ruder et al. were the first to introduce optical-flow-based temporal consistency into video style transfer; they achieve highly coherent results, but at the cost of worse ghosting artefacts. Moreover, their methods need 3 or 5 minutes per video frame, which makes them impractical for video applications. Huang et al. and Gupta et al. proposed real-time video style transfer by combining Fast-Neural-Style with temporal consistency. More recently, Chen et al. and Ruder et al. proposed methods that achieve more coherent results but sacrifice speed.

2. Motivation

We notice that all the methods mentioned above are built upon feed-forward networks, which are sensitive to small perturbations between adjacent frames: changes in lighting, noise and motion may cause large variations in the stylised video frames. Thus there is still room for improvement. Moreover, their networks all follow a per-network-per-style pattern, which means that a separate training process is needed for each style, and the training time may range from hours to days. In contrast, optimisation-based approaches are more robust to perturbations and naturally support arbitrary styles. We therefore follow the optimisation-based route.

We then need to deal with the slow runtime and ghosting artefacts of optimisation-based methods. Looking into the causes of these problems, we observe two drawbacks in previous optimisation-based methods (e.g., Anderson et al. and Ruder et al.): 1. they perform the entire style transformation for each video frame, which makes them slow; 2. they impose too many temporal consistency constraints between adjacent frames, which causes ghosting artefacts. To avoid these drawbacks, we adopt a straightforward idea: we only impose loose temporal consistency constraints among already stylised frames. In this way, the optimisation process only performs a light style transformation to produce seamless frames, so it runs much faster (around 1.8 seconds, including the per-frame stylising process) than previous methods (e.g., Anderson et al. and Ruder et al.).
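The idea of a light refinement on top of already stylised frames can be illustrated with a toy sketch. This is not the project's implementation: the function `refine_frame`, its parameters (`weight`, `steps`, `lr`) and the simple quadratic objective are all assumptions chosen for illustration. It starts from a per-frame stylised image and takes a few gradient steps that pull it towards the previous output (assumed to be already warped into the current frame), only where a mask marks the flow as reliable.

```python
import numpy as np

def refine_frame(stylised, warped_prev, mask, weight=0.5, steps=20, lr=0.2):
    """Lightly pull a stylised frame towards the warped previous output.

    Hypothetical objective (an assumption, not the project's loss):
        ||x - stylised||^2 + weight * ||mask * (x - warped_prev)||^2
    minimised by plain gradient descent, starting from the stylised frame.
    """
    x = stylised.astype(np.float64).copy()
    for _ in range(steps):
        # Gradient of the data term plus the masked temporal term.
        grad = 2.0 * (x - stylised) + 2.0 * weight * mask**2 * (x - warped_prev)
        x -= lr * grad
    return x

# Toy usage: the mask is 1 where the flow is trusted and 0 elsewhere.
stylised = np.ones((2, 2, 3))
warped_prev = np.zeros((2, 2, 3))
mask = np.array([[1.0, 0.0], [1.0, 0.0]])[..., None]
refined = refine_frame(stylised, warped_prev, mask)
```

Where the mask is 0 the stylised frame is left untouched, and where it is 1 the result settles between the two inputs. Because the optimisation starts from an already stylised frame, only a few steps are needed, which is the intuition behind the speed-up described above.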

Following this idea, we need to handle two further problems: 1. inconsistent textures between adjacent stylised frames due to flow errors (ghosting artefacts); 2. image degeneration after long-term running (blurriness artefacts).

3. Methodology

- Prevent flow errors (ghosting artefacts) via multi-scale flow, an incremental mask and multi-frame fusion.

- Prevent image degeneration (blurriness artefacts) via a sharpness loss consisting of perceptual losses and a pixel loss.

- Enhance temporal consistency with loose constraints on both the RGB level and the feature level.
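As a rough illustration of an RGB-level temporal constraint, the sketch below warps the previous stylised frame with a backward optical flow and penalises differences only at pixels whose source lies inside the image. It is a generic, simplified stand-in: the helper names `warp_backward` and `masked_temporal_loss`, the nearest-neighbour sampling and the plain validity mask are assumptions, and it does not reproduce the multi-scale flow, incremental mask, multi-frame fusion or feature-level terms listed above.

```python
import numpy as np

def warp_backward(prev, flow):
    """Warp `prev` (H, W, C) into the current frame with backward flow (H, W, 2).

    flow[y, x] = (dx, dy) points from a current pixel to its source in `prev`.
    Nearest-neighbour sampling keeps the sketch short; pixels whose source
    falls outside the image are reported as invalid.
    """
    h, w = flow.shape[:2]
    ys, xs = np.mgrid[0:h, 0:w]
    src_x = np.rint(xs + flow[..., 0]).astype(int)
    src_y = np.rint(ys + flow[..., 1]).astype(int)
    valid = (src_x >= 0) & (src_x < w) & (src_y >= 0) & (src_y < h)
    warped = prev[np.clip(src_y, 0, h - 1), np.clip(src_x, 0, w - 1)]
    return warped, valid

def masked_temporal_loss(curr, prev, flow):
    """Mean squared RGB difference between `curr` and the warped `prev`,
    averaged over valid pixels only."""
    warped, valid = warp_backward(prev, flow)
    mask = valid[..., None].astype(curr.dtype)
    sq_err = mask * (curr - warped) ** 2
    return sq_err.sum() / max(mask.sum() * curr.shape[-1], 1.0)
```

Masking out unreliable pixels is what keeps the constraint loose: where the flow cannot be trusted, the current frame is free to differ from the previous one, so flow errors are not propagated as ghosting.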

4. Qualitative Evaluation

4.1 Ablation study

4.2 Comparison to state-of-the-art methods

We compare our approach with state-of-the-art methods; these experiments demonstrate that our method produces more stable and diverse stylised videos.

5. More results

Here we show more video style transfer results produced by our approach with challenging style images.